Photo by Claudel Rheault on Unsplash

Sorting is an essential concept when writing algorithms. There are many kinds of sorts: bubble sort, shell sort, block sort, comb sort, cocktail sort, gnome sort. I'm not making these up!

This challenge gives us a short glimpse into the wonderful world of sorts. We have to sort an array of numbers from least to greatest and find out where a given number would belong in that array.

Algorithm Instructions

Return the lowest index at which a value (second argument) should be inserted into an array (first argument) once it has been sorted. The returned value should be a number.

For example, getIndexToIns([1,2,3,4], 1.5) should return 1 because it is greater than 1 (index 0), but less than 2 (index 1).

Likewise, getIndexToIns([20,3,5], 19) should return 2 because once the array has been sorted it will look like [3,5,20], and 19 is less than 20 (index 2) and greater than 5 (index 1).

Provided Test Cases

getIndexToIns([10, 20, 30, 40, 50], 35) should return 3.

getIndexToIns([10, 20, 30, 40, 50], 35) should return a number.

getIndexToIns([10, 20, 30, 40, 50], 30) should return 2.

getIndexToIns([10, 20, 30, 40, 50], 30) should return a number.

getIndexToIns([40, 60], 50) should return 1.

getIndexToIns([40, 60], 50) should return a number.

getIndexToIns([3, 10, 5], 3) should return 0.

getIndexToIns([3, 10, 5], 3) should return a number.

getIndexToIns([5, 3, 20, 3], 5) should return 2.

getIndexToIns([5, 3, 20, 3], 5) should return a number.

getIndexToIns([2, 20, 10], 19) should return 2.

getIndexToIns([2, 20, 10], 19) should return a number.

getIndexToIns([2, 5, 10], 15) should return 3.

getIndexToIns([2, 5, 10], 15) should return a number.

getIndexToIns([], 1) should return 0.

getIndexToIns([], 1) should return a number.
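A handy way to double-check these expected values: the lowest index at which num can go is simply the number of elements in arr that are strictly less than num. The sketch below is our own sanity check, not one of this article's solutions, and `countSmaller` and `testCases` are hypothetical names. It runs every provided case through that rule:

```javascript
// Hypothetical helper: the lowest index at which num can be inserted into the
// sorted array equals the number of elements strictly smaller than num.
function countSmaller(arr, num) {
  return arr.filter((n) => n < num).length;
}

// The provided test cases as [arr, num, expected] triples.
const testCases = [
  [[10, 20, 30, 40, 50], 35, 3],
  [[10, 20, 30, 40, 50], 30, 2],
  [[40, 60], 50, 1],
  [[3, 10, 5], 3, 0],
  [[5, 3, 20, 3], 5, 2],
  [[2, 20, 10], 19, 2],
  [[2, 5, 10], 15, 3],
  [[], 1, 0],
];

// Log "ok" for each case whose expected value matches the counting rule.
for (const [arr, num, expected] of testCases) {
  const actual = countSmaller(arr, num);
  const verdict = actual === expected ? "ok" : `expected ${expected}, got ${actual}`;
  console.log(JSON.stringify(arr), num, verdict);
}
```

Using "strictly less than" is what makes a value that is already in the array, like 30 in the second case, land at the lowest possible index.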

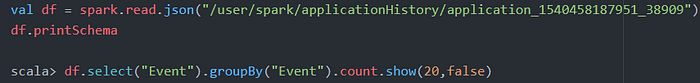

Solution #1: .sort(), .indexOf()

PEDAC

Understanding the Problem: We have two inputs, an array and a number. We need to return the index of our input number after it is sorted into the input array.

Examples/Test Cases: The good people at freeCodeCamp don't tell us in which direction the input array should be sorted, but the provided test cases make it clear that the input array should be sorted from least to greatest.

Notice that there is an edge case in the last two provided test cases, where the input array is an empty array.

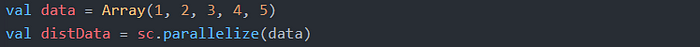

Data Structure: Since we're ultimately returning an index, sticking with arrays will work for us.

We will use a nifty method called .indexOf():

.indexOf() returns the first index at which an element can be found in an array, or -1 if the element is not present at all. For example:

```javascript
let food = ['pizza', 'frozen yogurt', 'chips', 'frankfurter', 'cake']

food.indexOf('chips')
// returns 2

food.indexOf('spaghetti')
// returns -1
```
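Because .indexOf() stops at the first match, Solution #1 automatically returns the lowest index when num already appears in the array, which is exactly what the instructions ask for. Here is a quick check with the test case getIndexToIns([5, 3, 20, 3], 5). The .concat() call is discussed below, and the compare function (a, b) => a - b makes .sort() order the numbers numerically. lastIndexOf() appears only for contrast:

```javascript
// Test case getIndexToIns([5, 3, 20, 3], 5): insert 5, then sort numerically.
const merged = [5, 3, 20, 3].concat(5).sort((a, b) => a - b); // [3, 3, 5, 5, 20]

console.log(merged.indexOf(5));     // 2, the first (lowest) index of 5
console.log(merged.lastIndexOf(5)); // 3, the last index of 5
```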

We're also going to use .concat() here instead of .push(). Why? Because when you add an element to an array using .push(), it modifies that array and returns its new length. When you add an element using .concat(), it returns a new array containing the result and leaves the original array unchanged. For example:

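```javascript
let array = [4, 10, 20, 37, 45]

array.push(98)
// returns 6, and array itself is now [4, 10, 20, 37, 45, 98]

let otherArray = [4, 10, 20, 37, 45]

otherArray.concat(98)
// returns [4, 10, 20, 37, 45, 98], and otherArray is unchanged
```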

Algorithm:

Insert num into arr.

Sort arr numerically from least to greatest.

Return the index of num.
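One detail worth spelling out in the sorting step: called with no arguments, .sort() converts the elements to strings and compares them in UTF-16 code unit order, so 20 sorts before 3. Passing a compare function such as (a, b) => a - b gives a true numeric order. A short illustration using the test case getIndexToIns([2, 20, 10], 19), where `withNum`, `asStrings` and `asNumbers` are just illustrative names:

```javascript
// Test case getIndexToIns([2, 20, 10], 19), with 19 already inserted.
const withNum = [2, 20, 10].concat(19);

// Default sort: elements are compared as strings, and "10" < "19" < "2" < "20".
const asStrings = [...withNum].sort(); // [10, 19, 2, 20]
console.log(asStrings.indexOf(19));    // 1, which is wrong

// Numeric sort: a - b is negative when a < b, so smaller numbers come first.
const asNumbers = [...withNum].sort((a, b) => a - b); // [2, 10, 19, 20]
console.log(asNumbers.indexOf(19));                   // 2, which is correct
```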

Code: See below!

Without local variables and comments:
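A minimal sketch of the three steps above, assuming a numeric compare function for .sort(). It is our reconstruction, not necessarily the author's exact code:

```javascript
// Sketch of Solution #1: insert num, sort numerically, return num's index.
function getIndexToIns(arr, num) {
  // .concat() returns a new array, so the caller's arr is not modified.
  // .sort() then sorts that new array in place, numerically.
  const sortedArr = arr.concat(num).sort((a, b) => a - b);
  // .indexOf() returns the first (lowest) index where num appears.
  return sortedArr.indexOf(num);
}

console.log(getIndexToIns([10, 20, 30, 40, 50], 35)); // 3
console.log(getIndexToIns([5, 3, 20, 3], 5));         // 2
console.log(getIndexToIns([], 1));                    // 0
```

The empty-array edge case needs no special handling here: [].concat(1) is [1], and 1 is found at index 0.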

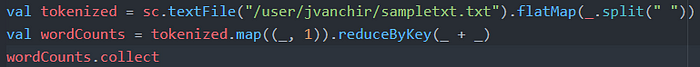

Solution #2: .sort(), .findIndex()

PEDAC

Understanding the Problem: We have two inputs, an array and a number. We need to return the index of our input number after it is sorted into the input array.

Examples/Test Cases: The good people at freeCodeCamp don't tell us in which direction the input array should be sorted, but the provided test cases make it clear that the input array should be sorted from least to greatest.

There are two edge cases to consider with this solution:

If the input array is empty, we need to return 0 because num would be the only element in that array, and therefore at index 0.

If num would belong at the end of arr once it is sorted from least to greatest, then we need to return the length of arr.
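Both edge cases show up as the same signal in code: .findIndex() returns -1 when no element passes the test. A small sketch, assuming the test is "first element greater than or equal to num" on an array that is already sorted (`firstAtLeast` is a hypothetical helper name):

```javascript
// Hypothetical helper: the first element that is >= num marks num's slot.
const firstAtLeast = (sortedArr, num) => sortedArr.findIndex((n) => n >= num);

console.log(firstAtLeast([], 1));          // -1: empty array, the answer should be 0
console.log(firstAtLeast([2, 5, 10], 15)); // -1: num is the largest, the answer should be 3
console.log(firstAtLeast([40, 60], 50));   // 1: a match, so this index is the answer
```

Since arr.length is 0 for an empty array, mapping -1 to arr.length would actually cover both edge cases at once.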

Data Structure: Since we're ultimately returning an index, sticking with arrays will work for us.

Let's check out .findIndex() to see how it's going to help solve this challenge:

.findIndex() returns the index of the first element in the array that satisfies the provided testing function. Otherwise, it returns -1, indicating that no element passed the test. For example:

```javascript
let numbers = [3, 17, 94, 15, 20]

numbers.findIndex((currentNum) => currentNum % 2 === 0)
// returns 2

numbers.findIndex((currentNum) => currentNum > 100)
// returns -1
```

This is useful because, once arr is sorted, we can use .findIndex() to compare our input num with each number in arr and figure out where it would fit in order from least to greatest.

Algorithm:

If arr is an empty array, return 0.

If num belongs at the end of the sorted array, return the length of arr.

Otherwise, return the index num would have if arr was sorted from least to greatest.

Code: See below!

Without local variables and comments:
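A minimal sketch of the three steps above, assuming .findIndex() searches a numerically sorted copy of arr for the first element greater than or equal to num. It is our reconstruction, not necessarily the author's exact code:

```javascript
// Sketch of Solution #2, following the three steps above.
function getIndexToIns(arr, num) {
  // Step 1: an empty array means num would be the only element, at index 0.
  if (arr.length === 0) {
    return 0;
  }

  // Copy before sorting so the caller's array is not mutated; sort numerically.
  const sortedArr = [...arr].sort((a, b) => a - b);

  // Find the first element that is greater than or equal to num.
  const index = sortedArr.findIndex((currentNum) => currentNum >= num);

  // Step 2: no such element, so num belongs at the end of the sorted array.
  if (index === -1) {
    return arr.length;
  }

  // Step 3: otherwise num slots in at the index that was found.
  return index;
}

console.log(getIndexToIns([2, 20, 10], 19)); // 2
console.log(getIndexToIns([2, 5, 10], 15));  // 3
```

The explicit empty-array check mirrors the steps; without it, the -1 branch would still return arr.length, which is 0.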

If you have other solutions and/or suggestions, please share them in the comments!

This article is part of the series freeCodeCamp Algorithm Scripting.

This article references freeCodeCamp Basic Algorithm Scripting: Where do I Belong.

You can follow me on Medium, LinkedIn, and GitHub!